VIRSH: Virtualizing a Physical Rocky 8 Linux Machine

Let’s jump right into virtualizing a KVM-based physical server using KVM tools such as virsh and Cockpit. As a twist, bonding is configured at the end rather than the beginning, to document a retrofit to an existing environment. Begin by identifying the network interfaces that will make up the setup:

[root@dl380g6-p02 network-scripts]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp2s0f0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 78:e7:d1:8f:4d:26 brd ff:ff:ff:ff:ff:ff

inet 10.3.0.10/24 brd 10.3.0.255 scope global noprefixroute enp2s0f0

valid_lft forever preferred_lft forever

inet6 fe80::7ae7:d1ff:fe8f:4d26/64 scope link

valid_lft forever preferred_lft forever

3: enp2s0f1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 78:e7:d1:8f:4d:28 brd ff:ff:ff:ff:ff:ff

4: enp3s0f0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 78:e7:d1:8f:4d:2a brd ff:ff:ff:ff:ff:ff

5: enp3s0f1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 78:e7:d1:8f:4d:2c brd ff:ff:ff:ff:ff:ff

[root@dl380g6-p02 network-scripts]#
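To pick out bridge and bond candidates at a glance, the listing above can be condensed. This is a minimal sketch, assuming the brief output format of `ip -br link` (name, state, MAC, flags); the function name `up_nics` is hypothetical and not part of any tool:

```shell
# up_nics: read `ip -br link` style lines on stdin and print the names of
# interfaces whose state column is UP, skipping the loopback device.
# (Hypothetical helper, written for illustration only.)
up_nics() {
    awk '$1 != "lo" && $2 == "UP" { print $1 }'
}

# Usage on the host (not run here):
#   ip -br link | up_nics
```

On the host above this should list the four physical NICs, since all of them report state UP.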

Next, install libvirt. In our case the two commands had to be run separately:

dnf install @virt

dnf install libvirt-devel virt-top libguestfs-tools wget virt-install virt-viewer

Or, in case the above doesn’t work (note that bridge-utils is deprecated in favour of iproute2, so bridging with it might not work: https://wiki.linuxfoundation.org/networking/bridge ):

dnf install libvirt virt-viewer virt-manager qemu-kvm virt-install virt-top libguestfs-tools -y

dnf install bridge-utils -y # ( May not work, use iproute2 instead. See above link. )

Download the Rocky Linux 9 ISO (in this case into the /mnt/iso-images folder, after creating it):

# cd /mnt/iso-images && wget https://download.rockylinux.org/pub/rocky/9/isos/x86_64/Rocky-9.3-x86_64-minimal.iso

Create the directory where the thin-provisioned drives will live, ideally on a large volume with redundancy against disk failure, such as RAID 6:

Disk /dev/sda: 7.45 TiB, 8193497718784 bytes, 16002925232 sectors

Create the LVs on this storage. In this case, LVM on RAID 6 with XFS is used, since it has worked well in our other deployments. ZFS was a candidate; however, after further reading of posts on the subject, the former approach was selected. Let’s create:

# pvcreate /dev/sda

# vgcreate raid6kvm01vg /dev/sda

# lvcreate -L+7TB -n raid6kvm01lv raid6kvm01vg

# mkfs.xfs -l size=64M -d agcount=32 -i attr=2,maxpct=5 -b size=4k -L kvm /dev/raid6kvm01vg/raid6kvm01lv -f

# blkid /dev/raid6kvm01vg/raid6kvm01lv

# cat /etc/fstab

UUID=856503af-b255-48c4-84fb-e4942dc5ec8e /mnt/raid6kvm01 xfs defaults,logbufs=8,allocsize=64K,noatime,nodiratime,nofail 0 0

# mkdir /mnt/raid6kvm01

# mount -a
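The fstab line above has to carry the UUID reported by blkid, which is easy to mistype. A small sketch that builds the line from the UUID and mount point, reusing the same mount options; the function name `fstab_entry` is hypothetical, and it only prints the line rather than editing /etc/fstab:

```shell
# fstab_entry UUID MOUNTPOINT: print an fstab line using the XFS mount
# options from the example above. It only prints; appending is left to you.
# (Hypothetical helper, written for illustration only.)
fstab_entry() {
    local uuid="$1" mountpoint="$2"
    printf 'UUID=%s %s xfs defaults,logbufs=8,allocsize=64K,noatime,nodiratime,nofail 0 0\n' \
        "$uuid" "$mountpoint"
}

# Usage on the host (not run here):
#   uuid="$(blkid -s UUID -o value /dev/raid6kvm01vg/raid6kvm01lv)"
#   fstab_entry "$uuid" /mnt/raid6kvm01 >> /etc/fstab
```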

Alternatively, if the main storage is on the same drive as the OS, that will likely be your target:

[root@dl380g6-p02 mnt]# mkdir kvm-drives

[root@dl380g6-p02 mnt]# cd kvm-drives/

[root@dl380g6-p02 kvm-drives]# pwd

/mnt/kvm-drives

[root@dl380g6-p02 kvm-drives]#

Enable libvirtd and confirm:

# virsh list --all

# systemctl enable --now libvirtd

# systemctl status libvirtd

Create the disks for the TEST VM:

# qemu-img create -f qcow2 /mnt/kvm-drives/mc-rocky01.nix.mds.xyz-disk01.qcow2 32G

# qemu-img create -f qcow2 /mnt/kvm-drives/mc-rocky01.nix.mds.xyz-disk02.qcow2 64G

OR

# qemu-img create -f qcow2 /mnt/raid6kvm01/mc-rocky01.nix.mds.xyz-disk01.qcow2 32G

# qemu-img create -f qcow2 /mnt/raid6kvm01/mc-rocky01.nix.mds.xyz-disk02.qcow2 64G

Then check them:

# qemu-img info /mnt/kvm-drives/mc-rocky01.nix.mds.xyz-disk01.qcow2

# qemu-img info /mnt/kvm-drives/mc-rocky01.nix.mds.xyz-disk02.qcow2

# qemu-img info /mnt/kvm-drives/mc-rocky01.nix.mds.xyz-disk01.qcow2

image: /mnt/kvm-drives/mc-rocky01.nix.mds.xyz-disk01.qcow2

file format: qcow2

virtual size: 32 GiB (34359738368 bytes)

disk size: 196 KiB

cluster_size: 65536

Format specific information:

compat: 1.1

compression type: zlib

lazy refcounts: false

refcount bits: 16

corrupt: false

extended l2: false

# qemu-img info /mnt/kvm-drives/mc-rocky01.nix.mds.xyz-disk02.qcow2

image: /mnt/kvm-drives/mc-rocky01.nix.mds.xyz-disk02.qcow2

file format: qcow2

virtual size: 64 GiB (68719476736 bytes)

disk size: 196 KiB

cluster_size: 65536

Format specific information:

compat: 1.1

compression type: zlib

lazy refcounts: false

refcount bits: 16

corrupt: false

extended l2: false

[root@dl380g6-p02 kvm-drives]#
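When a VM needs several disks, the create commands above follow an obvious pattern. A sketch that prints one `qemu-img create` command per requested size, so the result can be reviewed before anything is written; the function name `disk_cmds` and the diskNN numbering scheme are assumptions modelled on the file names used in this post:

```shell
# disk_cmds DIR VM SIZE...: print one `qemu-img create` command per size,
# numbering the disks disk01, disk02, ... as in the examples above.
# (Hypothetical helper; it prints commands and creates nothing itself.)
disk_cmds() {
    local dir="$1" vm="$2" n=1 size
    shift 2
    for size in "$@"; do
        printf 'qemu-img create -f qcow2 %s/%s-disk%02d.qcow2 %s\n' \
            "$dir" "$vm" "$n" "$size"
        n=$((n + 1))
    done
}

# Usage (not run here): review the output first, then pipe it to sh
#   disk_cmds /mnt/kvm-drives mc-rocky01.nix.mds.xyz 32G 64G | sh
```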

Clear all previous definitions in nmcli. For example:

# nmcli c delete enp2s0f0

# nmcli c delete enp2s0f1

# nmcli c delete enp3s0f0

# nmcli c delete enp3s0f1

# nmcli c delete br0

# nmcli c delete bridge-slave-enp2s0f0

Define bridged networking:

# virsh net-list --all

# nmcli con add ifname br0 type bridge con-name br0 ipv4.addresses 10.3.0.10/24 ipv4.gateway 10.3.0.1 ipv4.dns "192.168.0.46 192.168.0.51 192.168.0.224" ipv4.method manual

# nmcli con add type bridge-slave ifname enp2s0f0 master br0

# nmcli c s

Next, bring the physical interface offline and the bridge interface online. This is best done from the console, since networking will go offline and an SSH session would lose its connection. Check and verify:

# nmcli c down enp2s0f0

# nmcli c up br0

# nmcli c show

# nmcli c show --active

# virsh net-list --all

IMPORTANT: If the default route is missing, which can be confirmed with ip r or netstat -nr, add it; otherwise reaching the internet will not work. For example (substitute your own subnet, gateway and device):

ip route add 192.168.0.0/24 dev net0

ip route add default via 192.168.0.1 dev net0
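The check for a missing default route can be scripted, which is handy when working from a console after the network change. A minimal sketch, assuming the usual `ip route` output where the default route line begins with the word "default"; the function name `has_default_route` is hypothetical:

```shell
# has_default_route: read `ip route` output on stdin; succeed (exit 0) if
# any line starts with "default", fail (exit 1) otherwise.
# (Hypothetical helper, written for illustration only.)
has_default_route() {
    grep -q '^default '
}

# Usage on the host (not run here):
#   ip route | has_default_route || echo "default route missing"
```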

Next, define the br0 interface in virsh. Save this content to br0.xml:

<network> <name>br0</name> <forward mode="bridge"/> <bridge name="br0" /> </network>
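One way to create that file straight from the shell is a here-document. The sketch below writes the same four elements, one per line; the function name `write_bridge_xml` is hypothetical, and it assumes the libvirt network name and the host bridge name are identical, as they are in this post:

```shell
# write_bridge_xml NAME FILE: write a libvirt network definition that
# forwards to an existing host bridge called NAME.
# (Hypothetical helper; assumes network name == bridge name.)
write_bridge_xml() {
    local name="$1" file="$2"
    cat > "$file" <<EOF
<network>
  <name>${name}</name>
  <forward mode="bridge"/>
  <bridge name="${name}"/>
</network>
EOF
}

# Usage (not run here):
#   write_bridge_xml br0 ./br0.xml
```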

Next, import the configuration:

# virsh net-define ./br0.xml

Start the network, enable autostart and verify:

# virsh net-start br0

# virsh net-autostart br0

# virsh net-list –all

Create a virtual machine:

# virt-install \

--name mc-rocky01.nix.mds.xyz \

--ram 4096 \

--vcpus 4 \

--disk path=/mnt/kvm-drives/mc-rocky01.nix.mds.xyz-disk01.qcow2 \

--disk path=/mnt/kvm-drives/mc-rocky01.nix.mds.xyz-disk02.qcow2 \

--os-variant centos-stream9 \

--os-type linux \

--network bridge=br0,model=virtio \

--graphics vnc,listen=0.0.0.0 \

--console pty,target_type=serial \

--location /mnt/iso-images/Rocky-9.1-x86_64-minimal.iso

NOTE: If the image is in a location that is not accessible, such as /root/, this error will be seen:

ERROR internal error: process exited while connecting to monitor: 2023-01-23T01:54:21.710369Z qemu-kvm: -blockdev {"driver":"file","filename":"/root/Rocky-9.1-x86_64-minimal.iso","node-name":"libvirt-1-storage","auto-read-only":true,"discard":"unmap"}: Could not open '/root/Rocky-9.1-x86_64-minimal.iso': Permission denied
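A quick pre-flight check can catch this before virt-install is started. The sketch below is only a rough hint: it assumes the qemu process reaches the file through the "others" permission bits, and it ignores ACLs, group membership and SELinux. The function name `world_reachable` is hypothetical, and it relies on GNU `stat -c` and `readlink -f`:

```shell
# world_reachable PATH: succeed if PATH has the "others" read bit set and
# every parent directory up to / has the "others" execute (search) bit set.
# (Hypothetical helper; ignores ACLs, groups and SELinux.)
world_reachable() {
    local path dir mode
    path="$(readlink -f "$1")" || return 1
    mode="$(stat -c %a "$path")" || return 1
    # The last digit of the octal mode is the "others" triplet; 4 = read.
    [ $(( (mode % 10) & 4 )) -ne 0 ] || return 1
    dir="$(dirname "$path")"
    while :; do
        mode="$(stat -c %a "$dir")" || return 1
        # 1 = execute (search) permission for others.
        [ $(( (mode % 10) & 1 )) -ne 0 ] || return 1
        [ "$dir" = "/" ] && break
        dir="$(dirname "$dir")"
    done
}

# Usage (not run here):
#   world_reachable /mnt/iso-images/Rocky-9.1-x86_64-minimal.iso || echo "move the ISO"
```

An ISO under /root fails this check because /root is normally not searchable by other users, which matches the error above.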

ANOTHER NOTE: If --extra-args='console=ttyS0' or --nographics is added to the above virt-install, the VNC, SPICE or other graphics options will be skipped and a text-based installation will begin. In this case, the VNC route will be taken, though SPICE will also be discussed.

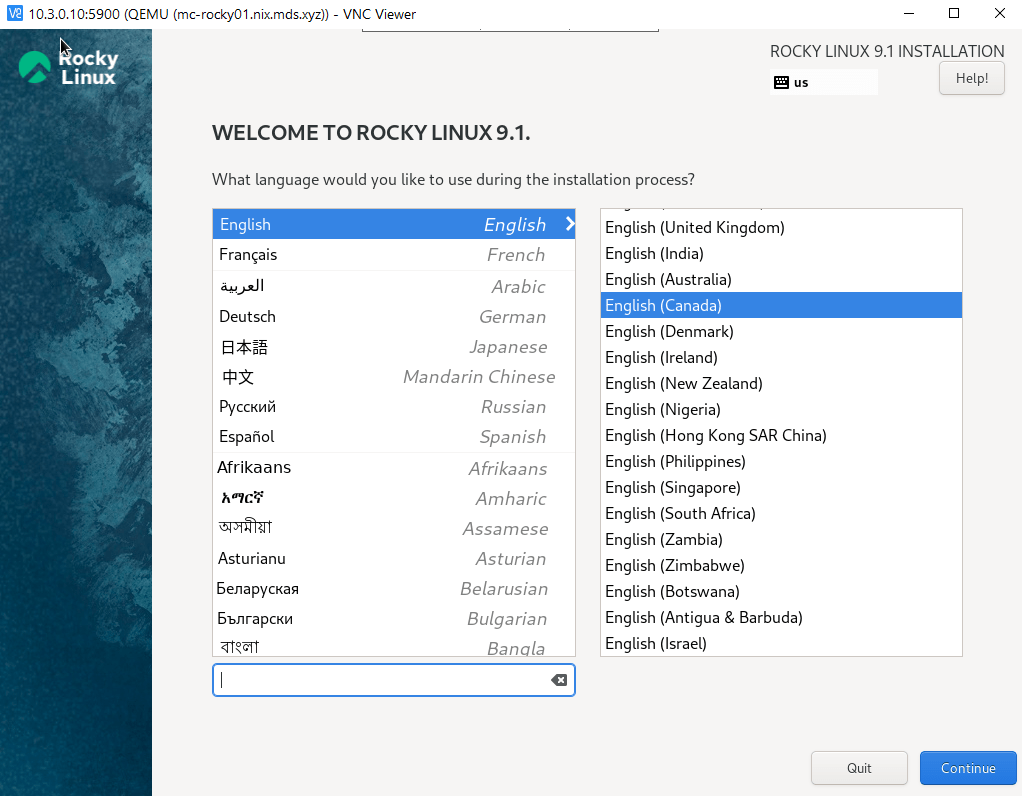

Login to the console and monitor the installation, answering any questions in the process:

# virsh

Welcome to virsh, the virtualization interactive terminal.

Type: 'help' for help with commands

'quit' to quit

virsh #

virsh # list

Id Name State

----------------------------------------

2 mc-rocky01.nix.mds.xyz running

virsh # console mc-rocky01.nix.mds.xyz

Connected to domain 'mc-rocky01.nix.mds.xyz'

Escape character is ^] (Ctrl + ])

If there’s no activity, look for a message such as this:

WARNING Unable to connect to graphical console: virt-viewer not installed. Please install the 'virt-viewer' package.

WARNING No console to launch for the guest, defaulting to --wait -1

Install virt-viewer if it is not already installed (see above). Once installed, find the port on which your new virtual machine is listening:

# netstat -pnltu|grep -Ei qemu

tcp 0 0 0.0.0.0:5900 0.0.0.0:* LISTEN 7141/qemu-kvm

And verify the machine name from the process table output:

# ps -ef | grep -Ei 7141

qemu 7141 1 19 20:56 ? 00:01:42 /usr/libexec/qemu-kvm -name guest=mc-rocky01.nix.mds.xyz,debug-threads=on -S -object {"qom-type":"secret","id":"masterKey0","format":"raw","file":"/var/lib/libvirt/qemu/domain-2-mc-rocky01.nix.mds.x/master-key.aes"} -machine pc-q35-rhel8.6.0,usb=off,dump-guest-core=off,memory-backend=pc.ram -accel kvm -cpu Nehalem-IBRS,vme=on,pdcm=on,x2apic=on,tsc-deadline=on,hypervisor=on,arat=on,tsc-adjust=on,umip=on,stibp=on,arch-capabilities=on,ssbd=on,rdtscp=on,ibpb=on,ibrs=on,amd-stibp=on,amd-ssbd=on,skip-l1dfl-vmentry=on,pschange-mc-no=on -m 4096 -object {"qom-type":"memory-backend-ram","id":"pc.ram","size":4294967296} -overcommit mem-lock=off -smp 4,sockets=4,cores=1,threads=1 -uuid 9ba74db1-1cdf-4940-b108-e9ad9cdb31b5 -no-user-config -nodefaults -chardev socket,id=charmonitor,fd=39,server=on,wait=off -mon chardev=charmonitor,id=monitor,mode=control -rtc base=utc,driftfix=slew -global kvm-pit.lost_tick_policy=delay -no-hpet -no-shutdown -global ICH9-LPC.disable_s3=1 -global ICH9-LPC.disable_s4=1 -boot strict=on -kernel /var/lib/libvirt/boot/virtinst-q_1nkyy8-vmlinuz -initrd /var/lib/libvirt/boot/virtinst-nhyry5q7-initrd.img -device pcie-root-port,port=16,chassis=1,id=pci.1,bus=pcie.0,multifunction=on,addr=0x2 -device pcie-root-port,port=17,chassis=2,id=pci.2,bus=pcie.0,addr=0x2.0x1 -device pcie-root-port,port=18,chassis=3,id=pci.3,bus=pcie.0,addr=0x2.0x2 -device pcie-root-port,port=19,chassis=4,id=pci.4,bus=pcie.0,addr=0x2.0x3 -device pcie-root-port,port=20,chassis=5,id=pci.5,bus=pcie.0,addr=0x2.0x4 -device pcie-root-port,port=21,chassis=6,id=pci.6,bus=pcie.0,addr=0x2.0x5 -device pcie-root-port,port=22,chassis=7,id=pci.7,bus=pcie.0,addr=0x2.0x6 -device pcie-root-port,port=23,chassis=8,id=pci.8,bus=pcie.0,addr=0x2.0x7 -device qemu-xhci,p2=15,p3=15,id=usb,bus=pci.2,addr=0x0 -device virtio-serial-pci,id=virtio-serial0,bus=pci.3,addr=0x0 -blockdev {"driver":"file","filename":"/mnt/kvm-drives/mc-rocky01.nix.mds.xyz-disk01.qcow2","node-name":"libvirt-3-storage","auto-read-only":true,"discard":"unmap"} -blockdev {"node-name":"libvirt-3-format","read-only":false,"driver":"qcow2","file":"libvirt-3-storage","backing":null} -device virtio-blk-pci,bus=pci.4,addr=0x0,drive=libvirt-3-format,id=virtio-disk0,bootindex=1 -blockdev {"driver":"file","filename":"/mnt/kvm-drives/mc-rocky01.nix.mds.xyz-disk02.qcow2","node-name":"libvirt-2-storage","auto-read-only":true,"discard":"unmap"} -blockdev {"node-name":"libvirt-2-format","read-only":false,"driver":"qcow2","file":"libvirt-2-storage","backing":null} -device virtio-blk-pci,bus=pci.5,addr=0x0,drive=libvirt-2-format,id=virtio-disk1 -blockdev {"driver":"file","filename":"/mnt/iso-images/Rocky-9.1-x86_64-minimal.iso","node-name":"libvirt-1-storage","auto-read-only":true,"discard":"unmap"} -blockdev {"node-name":"libvirt-1-format","read-only":true,"driver":"raw","file":"libvirt-1-storage"} -device ide-cd,bus=ide.0,drive=libvirt-1-format,id=sata0-0-0 -netdev tap,fd=40,id=hostnet0,vhost=on,vhostfd=42 -device virtio-net-pci,netdev=hostnet0,id=net0,mac=52:54:00:cb:13:ba,bus=pci.1,addr=0x0 -chardev pty,id=charserial0 -device isa-serial,chardev=charserial0,id=serial0 -chardev socket,id=charchannel0,fd=38,server=on,wait=off -device virtserialport,bus=virtio-serial0.0,nr=1,chardev=charchannel0,id=channel0,name=org.qemu.guest_agent.0 -device usb-tablet,id=input0,bus=usb.0,port=1 -audiodev {"id":"audio1","driver":"none"} -vnc 0.0.0.0:0,audiodev=audio1 -device VGA,id=video0,vgamem_mb=16,bus=pcie.0,addr=0x1 -device virtio-balloon-pci,id=balloon0,bus=pci.6,addr=0x0 -object {"qom-type":"rng-random","id":"objrng0","filename":"/dev/urandom"} -device virtio-rng-pci,rng=objrng0,id=rng0,bus=pci.7,addr=0x0 -sandbox on,obsolete=deny,elevateprivileges=deny,spawn=deny,resourcecontrol=deny -msg timestamp=on

# ip a

9: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 78:e7:d1:8f:4d:26 brd ff:ff:ff:ff:ff:ff

inet 10.3.0.10/24 brd 10.3.0.255 scope global noprefixroute br0

valid_lft forever preferred_lft forever

inet6 fe80::1af2:4625:48b7:9030/64 scope link noprefixroute

valid_lft forever preferred_lft forever
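Instead of reading the port out of netstat and the process table, libvirt can report the VNC display directly through `virsh vncdisplay`, and the TCP port is 5900 plus the display number. A small sketch of that conversion; the function name `vnc_port` is hypothetical, and the display is assumed to arrive in the form `:0` or `address:0`:

```shell
# vnc_port DISPLAY: convert a VNC display such as ":0" or "127.0.0.1:1"
# into its TCP port (5900 + display number).
# (Hypothetical helper, written for illustration only.)
vnc_port() {
    local display="${1##*:}"   # keep only the number after the last colon
    echo $((5900 + display))
}

# Usage on the host (not run here):
#   vnc_port "$(virsh vncdisplay mc-rocky01.nix.mds.xyz)"
```

For the guest above, which runs with `-vnc 0.0.0.0:0`, this gives the same port 5900 that netstat showed.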

Next, let’s connect to the graphical interface by specifying the host IP and the VNC port found above to view the graphical install. Before we do so, we’ll need a plugin for Chrome:

Chrome Web Store

Home / Apps / Spice Client

Or just search on Google. Once installed, click Launch app to log in to a client. However, this failed for us, so let’s download the Win x64 client instead:

https://virt-manager.org/download/

Look for the Win x64 MSI (gpg) text on the page. Virt-viewer will get installed in something like C:\Program Files\VirtViewer v11.0-256. Browse to the C:\Program Files\VirtViewer v11.0-256\bin folder, then start remote-viewer.exe:

However, the above will only work if the graphics specified is spice:

--graphics spice,listen=0.0.0.0

The corresponding element in the xml files is:

<graphics type='spice' autoport='yes' listen='0.0.0.0'>

<listen type='address' address='0.0.0.0'/>

</graphics>

However, in our case we used VNC. In this case, the VNC Viewer is required. That’s a different kind of animal:

https://www.realvnc.com/en/connect/download/viewer/

Establish a connection and continue with the Rocky 9 setup:

Continue with the install making the appropriate selections. Note the dual disk drives specified in the virt-install command. They’re available for our install:

Network parameters could have been configured statically here; however, the point is to test DHCP across the bridge interface br0. Once installed:

# virt-install \

> --name mc-rocky01.nix.mds.xyz \

> --ram 4096 \

> --vcpus 4 \

> --disk path=/mnt/kvm-drives/mc-rocky01.nix.mds.xyz-disk01.qcow2 \

> --disk path=/mnt/kvm-drives/mc-rocky01.nix.mds.xyz-disk02.qcow2 \

> --os-variant centos-stream9 \

> --os-type linux \

> --network bridge=br0,model=virtio \

> --graphics vnc,listen=0.0.0.0 \

> --console pty,target_type=serial \

> --location /mnt/iso-images/Rocky-9.1-x86_64-minimal.iso

WARNING Unable to connect to graphical console: virt-viewer not installed. Please install the 'virt-viewer' package.

WARNING No console to launch for the guest, defaulting to --wait -1

Starting install…

Retrieving file vmlinuz… | 11 MB 00:00:00

Retrieving file initrd.img… | 88 MB 00:00:00

Domain is still running. Installation may be in progress.

Waiting for the installation to complete.

Domain has shutdown. Continuing.

Domain creation completed.

Restarting guest.

#

Verify the IP assigned once the machine is back up (if prompted for a disk password, since we chose encryption, enter it and proceed with the boot):

Take the time to set the hostname, as per the above image:

# hostnamectl set-hostname mc-rocky01.nix.mds.xyz

Now it’s time to test the connectivity from our Windows 10 Laptop:

Using username “root”.

root@10.3.0.179's password:

Last login: Sun Jan 22 22:19:46 2023

[root@mc-rocky01 ~]#

[root@mc-rocky01 ~]#

[root@mc-rocky01 ~]#

[root@mc-rocky01 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp1s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:cb:13:ba brd ff:ff:ff:ff:ff:ff

inet 10.3.0.179/24 brd 10.3.0.255 scope global dynamic noprefixroute enp1s0

valid_lft 3107sec preferred_lft 3107sec

inet6 fe80::5054:ff:fecb:13ba/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@mc-rocky01 ~]#

[root@mc-rocky01 ~]#

[root@mc-rocky01 ~]# cat /etc/resolv.conf

# Generated by NetworkManager

search nix.mds.xyz mws.mds.xyz mds.xyz

nameserver 192.168.0.46

nameserver 192.168.0.51

nameserver 192.168.0.224

[root@mc-rocky01 ~]#

Note how the DHCP server populated all DNS servers according to its configuration. Hence external resolution from the KVM guest works, and the guest is able to reach online sites and resources. Virsh lists a running machine:

[root@dl380g6-p02 iso-images]# virsh list --all

Id Name State

----------------------------------------

3 mc-rocky01.nix.mds.xyz running

[root@dl380g6-p02 iso-images]#

and fdisk from the guest KVM machine lists the correct drives:

[root@mc-rocky01 ~]# fdisk -l

Disk /dev/vda: 32 GiB, 34359738368 bytes, 67108864 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0xfbc22483

Device Boot Start End Sectors Size Id Type

/dev/vda1 * 2048 2099199 2097152 1G 83 Linux

/dev/vda2 2099200 67108863 65009664 31G 83 Linux

Disk /dev/vdb: 64 GiB, 68719476736 bytes, 134217728 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

…………………….

[root@mc-rocky01 ~]

Check block ID’s:

[root@mc-rocky01 ~]# blkid /dev/vdb

[root@mc-rocky01 ~]# blkid /dev/vda

/dev/vda: PTUUID="fbc22483" PTTYPE="dos"

[root@mc-rocky01 ~]# blkid /dev/vda1

/dev/vda1: UUID="6d234e64-426d-46d1-a0de-fb4dd0080283" TYPE="xfs" PARTUUID="fbc22483-01"

[root@mc-rocky01 ~]#

Time to retrofit bonding (similar in purpose to teaming) into the mix for some HA over the 4 NICs. As before, since the network configuration will be adjusted, connectivity will be lost, so it’s a good idea to have the console handy. Before doing anything else, remove all the existing configurations (don’t worry about KVM; it will begin to work again once we redefine br0):

# nmcli c

# nmcli c delete br0

# nmcli c delete bridge-slave-enp2s0f0

There should be nothing defined:

# nmcli c

NAME UUID TYPE DEVICE

and the /etc/sysconfig/network-scripts folder should be empty. Next, define the bond interface, reusing the addressing from the earlier configuration. Use one of the following variants:

# nmcli connection add type bond con-name bond0 ifname bond0 bond.options "mode=active-backup,miimon=100" ipv4.method disabled ipv6.method ignore

OR

# nmcli con add type bond con-name bond0 ifname bond0 mode active-backup ipv4.method disabled ipv6.method ignore ipv4.addresses 10.3.0.10/24 ipv4.gateway 10.3.0.1 ipv4.dns "192.168.0.46 192.168.0.51 192.168.0.224" ipv4.method manual

OR

# nmcli con add type bond con-name bond0 ifname bond0 mode active-backup ipv6.method ignore ipv4.addresses 10.3.0.10/24 ipv4.gateway 10.3.0.1 ipv4.dns "192.168.0.46 192.168.0.51 192.168.0.224" ipv4.method manual

# nmcli con add type bond-slave con-name enp2s0f0 ifname enp2s0f0 master bond0

# nmcli con add type bond-slave con-name enp2s0f1 ifname enp2s0f1 master bond0

# nmcli con add type bond-slave con-name enp3s0f0 ifname enp3s0f0 master bond0

# nmcli con add type bond-slave con-name enp3s0f1 ifname enp3s0f1 master bond0

NOTE: With the first variant there is no IP assignment on bond0. It is not needed, since the address will go on the br0 interface as before. Activate the connections:

# nmcli c up enp2s0f0

# nmcli c up enp2s0f1

# nmcli c up enp3s0f0

# nmcli c up enp3s0f1

Activate the bond0 interface:

# nmcli con up bond0
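The four near-identical port commands above lend themselves to a loop, which also avoids copy-and-paste slips in the interface names. A sketch that only prints the nmcli commands for review; the function name `bond_port_cmds` is hypothetical, and the connection names are assumed to match the interface names as in the commands above:

```shell
# bond_port_cmds BOND NIC...: print the nmcli commands that attach each NIC
# to BOND, followed by the commands that bring the new connections up.
# (Hypothetical helper; it prints commands and changes nothing itself.)
bond_port_cmds() {
    local bond="$1" nic
    shift
    for nic in "$@"; do
        printf 'nmcli con add type bond-slave con-name %s ifname %s master %s\n' \
            "$nic" "$nic" "$bond"
    done
    for nic in "$@"; do
        printf 'nmcli con up %s\n' "$nic"
    done
}

# Usage (not run here): review the output first, then pipe it to sh
#   bond_port_cmds bond0 enp2s0f0 enp2s0f1 enp3s0f0 enp3s0f1
```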

Next, reestablish the bridge interface. IMPORTANT NOTE: This time the bond0 is added, not the individual physical NIC:

# virsh net-list --all

# nmcli con add ifname br0 type bridge con-name br0 ipv4.addresses 10.3.0.10/24 ipv4.gateway 10.3.0.1 ipv4.dns "192.168.0.46 192.168.0.51 192.168.0.224" ipv4.method manual

All interfaces need to be added to the bridge. NOTE: It appears that bond0 does not work as a bridge port here:

# nmcli con add type bridge-slave ifname bond0 master br0

# nmcli con add type bridge-slave ifname enp2s0f0 master br0

# nmcli con add type bridge-slave ifname enp2s0f1 master br0

# nmcli con add type bridge-slave ifname enp3s0f0 master br0

# nmcli con add type bridge-slave ifname enp3s0f1 master br0

# nmcli c up bridge-slave-enp2s0f0

# nmcli c up bridge-slave-enp2s0f1

# nmcli c up bridge-slave-enp3s0f0

# nmcli c up bridge-slave-enp3s0f1

# (optional, not working) nmcli c add type vlan con-name vlan0 ifname bond0.0 dev bond0 id 0 master br0 slave-type bridge

# nmcli c s

Test by starting the virtual machine defined earlier:

virsh # start mc-rocky01.nix.mds.xyz

Domain 'mc-rocky01.nix.mds.xyz' started

virsh #

Then ping the physical host:

C:\Users\tom>ping 10.3.0.10 -t

Pinging 10.3.0.10 with 32 bytes of data:

Reply from 10.3.0.10: bytes=32 time=1ms TTL=62

Reply from 10.3.0.10: bytes=32 time=1ms TTL=62

And ping the KVM VM as well:

C:\Users\tom>ping 10.3.0.179 -t

Pinging 10.3.0.179 with 32 bytes of data:

Reply from 10.3.0.179: bytes=32 time=1ms TTL=62

Reply from 10.3.0.179: bytes=32 time=1ms TTL=62

After all is said and done, the interfaces:

[root@dl380g6-p02 ~]# nmcli c

NAME UUID TYPE DEVICE

br0 a080d0c1-3828-4595-b08f-ed6854354660 bridge br0

bond0 ca5a28e1-6bb0-4f43-b2b3-73af73fb877f bond bond0

virbr0 19b49e05-eea8-43ce-be68-a52e50c774b0 bridge virbr0

vnet0 05bb4b3f-834a-4a07-b9bf-a3cf22ad5d76 tun vnet0

bridge-slave-enp2s0f0 7a6300eb-7cbe-4629-97af-a49adc2c15a9 ethernet enp2s0f0

bridge-slave-enp2s0f1 1eebfee8-b27d-4579-a605-768282c579cd ethernet enp2s0f1

bridge-slave-enp3s0f0 a5b96f1f-0d1f-4773-b2fe-243c382aa51b ethernet enp3s0f0

bridge-slave-enp3s0f1 a38f12f8-c7af-4047-b024-3d15cadc7eda ethernet enp3s0f1

bridge-slave-bond0 97b2404f-a056-452b-a081-d27f7645fb75 ethernet --

enp2s0f0 750293af-2d1d-4d18-bd75-4b29324afd10 ethernet --

enp2s0f1 c8261b81-f318-4114-817e-77019d6ff404 ethernet --

enp3s0f0 09be1a9d-b9f4-4748-8a0a-4793a9426245 ethernet --

enp3s0f1 b1a8b75e-abec-4488-8f15-d576a4048ed9 ethernet --

Use the following commands to test failover capability, taking the links down and bringing them back up while the pings above keep running:

# ip link set dev enp2s0f0 down

# ip link set dev enp2s0f1 down

# ip link set dev enp3s0f0 down

# ip link set dev enp3s0f1 down

# ip link set dev enp2s0f0 up

# ip link set dev enp2s0f1 up

# ip link set dev enp3s0f0 up

# ip link set dev enp3s0f1 up
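While links are being taken down and brought back, it helps to see which port a bond currently uses. For an active-backup bond the kernel exposes this in /proc/net/bonding/<bond> on a line starting with "Currently Active Slave:". A minimal sketch that extracts it; the function name `active_slave` is hypothetical, and it is only meaningful if bond0 is actually carrying the traffic:

```shell
# active_slave [FILE]: print the interface named on the
# "Currently Active Slave:" line of a bonding status file.
# FILE defaults to /proc/net/bonding/bond0.
# (Hypothetical helper, written for illustration only.)
active_slave() {
    local file="${1:-/proc/net/bonding/bond0}"
    awk -F': ' '/^Currently Active Slave:/ { print $2 }' "$file"
}

# Usage on the host (not run here), e.g. between the down/up commands:
#   active_slave
```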

As noted above, in our testing bond interfaces appeared to be incompatible with bridges on Rocky 8+ / RHEL 8+ / CentOS 8+, whereas on RHEL 7 clones it is sufficient to add bond0 to br0.

You’re now set with bonding and redundancy on the KVM side!

COMING UP!

UI and Cockpit installation (Plus any other goodies I’ll think of before completing this post)

Cheers,

TK

REF: RHEL7: https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/html/networking_guide/sec-vlan_on_bond_and_bridge_using_the_networkmanager_command_line_tool_nmcli

REF: RHEL 8: https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/configuring_and_managing_networking/configuring-a-network-bridge_configuring-and-managing-networking#configuring-a-network-bridge-using-nmcli-commands_configuring-a-network-bridge